Onboard GCP to Yotascale

To onboard your GCP project to Yotascale, take these steps in your GCP console and then go to the Yotascale dashboard to enter the parameters.

GCP Console Steps Required

Take these steps to give Yotascale access to your Cost Usage, Recommendations, and Container metrics data.

Your project must host the cost export data in a BigQuery dataset and be able to export it. You then export the data to a Cloud Storage bucket/prefix and give Yotascale read access to it.

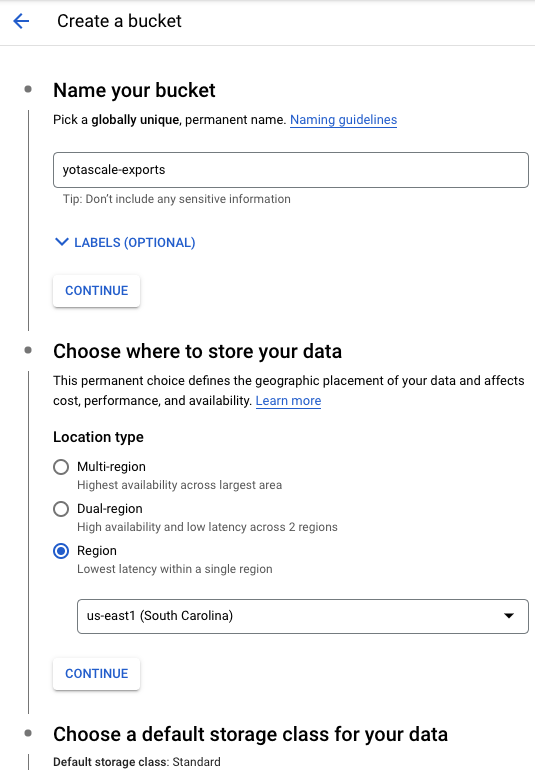

Step 1: Create a GCS bucket for the shared cost data export

Create the GCS bucket in the same project that hosts the detailed usage export from Billing.

Select the project that hosts the detailed billing export.

From the left sidebar, go to Cloud Storage then select the Buckets tab.

To add a bucket, click + CREATE. We recommend that you use a dedicated bucket to allow Yotascale to read cost data.

Tip: Choose a single-region, low-cost location, such as us-east1, and the Standard storage class.

Tip: Use a name that’s easy to remember and associate with Yotascale, such as yotascale-exports.

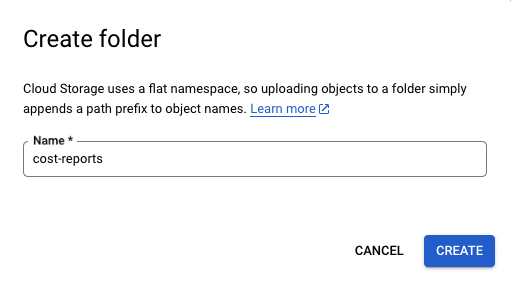

Create a folder for the data exports, enter the name cost-reports, and click CREATE.

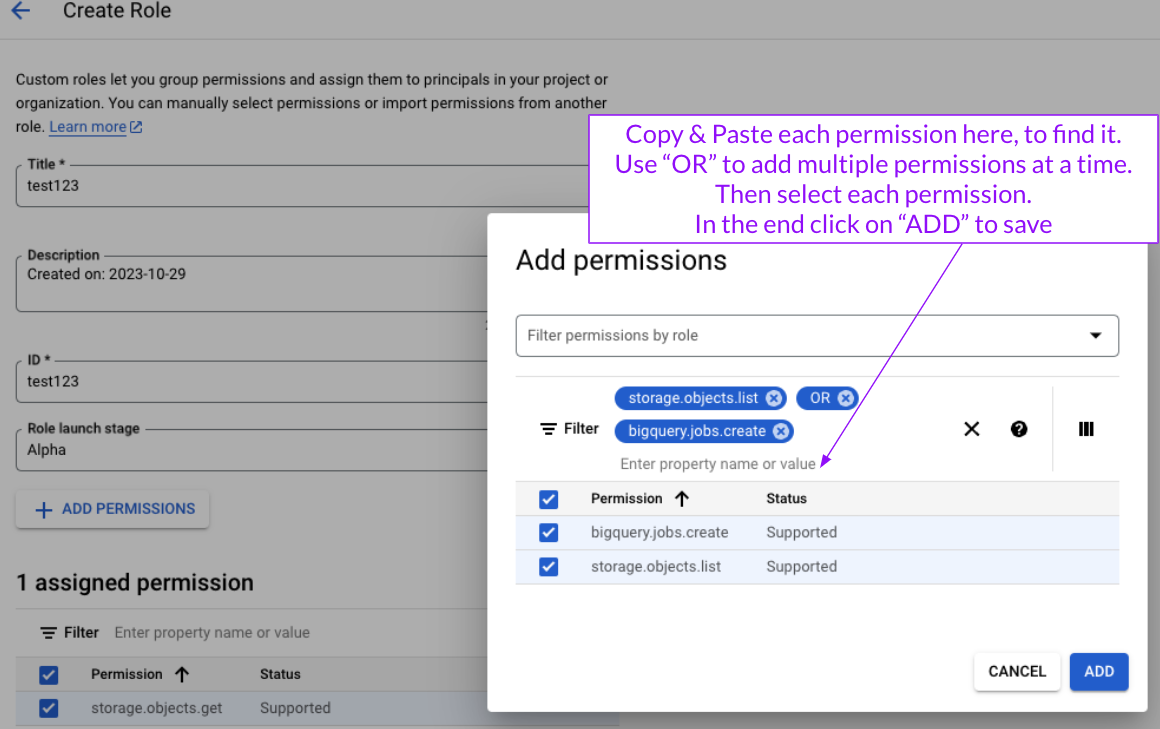
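The console steps above can also be done from the command line. The following is a minimal sketch, not part of Yotascale's instructions, that assembles (but does not run) a `gcloud storage buckets create` command for a single-region Standard bucket. The project ID `my-billing-project` is a hypothetical placeholder, and the name check is a simplified version of the Cloud Storage naming rules that ignores names containing dots.

```python
import re
import shlex

# Simplified Cloud Storage bucket-name rule for names without dots (assumption):
# 3-63 characters; lowercase letters, digits, hyphens, and underscores;
# must start and end with a letter or digit.
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$")


def bucket_create_command(bucket: str, project: str, location: str = "us-east1") -> list[str]:
    """Return gcloud arguments that create a single-region Standard bucket."""
    if not _BUCKET_NAME.match(bucket):
        raise ValueError(f"invalid bucket name: {bucket!r}")
    return [
        "gcloud", "storage", "buckets", "create", f"gs://{bucket}",
        f"--project={project}",
        f"--location={location}",
        "--default-storage-class=STANDARD",
    ]


if __name__ == "__main__":
    # Hypothetical values: replace with your own bucket name and project ID.
    print(shlex.join(bucket_create_command("yotascale-exports", "my-billing-project")))
```

Printing the command instead of executing it lets you review the values before anything is created in your project.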

Step 2: Create a custom role for Yotascale

Create a role with read-only access to your GCP cost and usage details and the GCP recommendations.

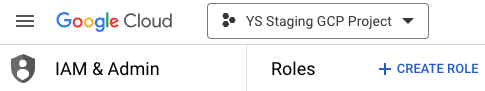

From the main menu, go to IAM & Admin.

Select the project that hosts the detailed billing export.

Click Roles, and then + CREATE ROLE.

Enter the title Yotascale and the ID yotascale for the role.

Click + ADD PERMISSIONS so that Yotascale can:

Read the export data from the GCS bucket.

Read the Recommendations export from BigQuery.

Match resource IDs in the cost export to resource IDs in the recommendations export. GCP does not provide this mapping directly in the cost export file, so the matching is done by using resource metadata looked up from the resource ID.

Tip: To add several permissions at once, enter OR between them in the filter. Or add one permission at a time.

Required permissions (10 in total):

- storage.objects.list

- storage.objects.get

- bigquery.jobs.create

- bigquery.capacityCommitments.get

- bigquery.tables.get

- compute.addresses.get

- compute.disks.get

- compute.images.get

- compute.instanceGroupManagers.get

- compute.instances.get

Click CREATE to save the role.

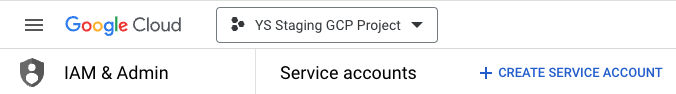
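As with the bucket, the same custom role can be scripted. This is a sketch that assembles a `gcloud iam roles create` command with the role ID, title, and 10 permissions listed above. The project ID is a hypothetical placeholder, and `--stage=GA` is an assumption; review the printed command before running it.

```python
import shlex

# The ten permissions listed in Step 2.
YOTASCALE_PERMISSIONS = [
    "storage.objects.list",
    "storage.objects.get",
    "bigquery.jobs.create",
    "bigquery.capacityCommitments.get",
    "bigquery.tables.get",
    "compute.addresses.get",
    "compute.disks.get",
    "compute.images.get",
    "compute.instanceGroupManagers.get",
    "compute.instances.get",
]


def role_create_command(project: str, role_id: str = "yotascale", title: str = "Yotascale") -> list[str]:
    """Return gcloud arguments that create the project-level custom role."""
    return [
        "gcloud", "iam", "roles", "create", role_id,
        f"--project={project}",
        f"--title={title}",
        # gcloud expects a comma-separated permission list.
        "--permissions=" + ",".join(YOTASCALE_PERMISSIONS),
        "--stage=GA",
    ]


if __name__ == "__main__":
    # "my-billing-project" is a hypothetical project ID.
    print(shlex.join(role_create_command("my-billing-project")))
```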

Step 3: Create a service account for Yotascale

Create a service account and attach the custom role to it.

From the IAM & Admin dashboard, click Service accounts.

Make sure the project containing the BigQuery billing export and GCS bucket is selected.

Click + CREATE SERVICE ACCOUNT.

Give it an easy-to-find name, such as Yotascale Service Account.

Click CREATE AND CONTINUE.

From Select a role, search for the role that you created in the previous step (in this example, Yotascale). The wizard's third step, granting users access to this service account, is not required.

Click DONE.

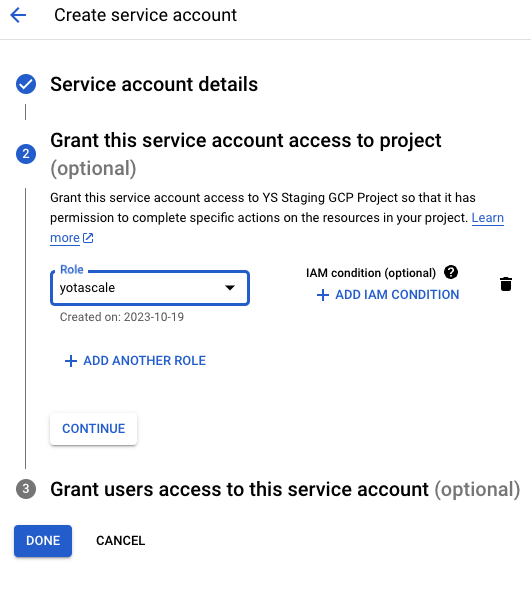

Tip: If you clicked DONE without adding the role, you can go to the IAM menu to search for the Yotascale Service Account and add or modify the role there.

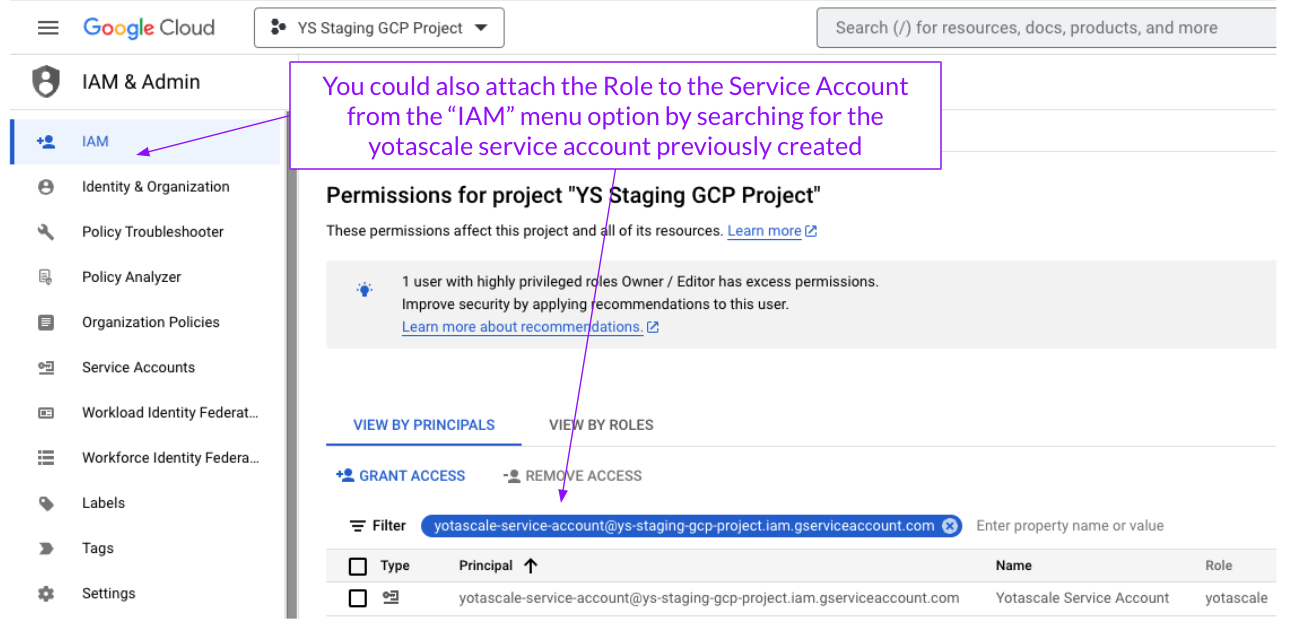

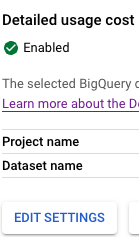

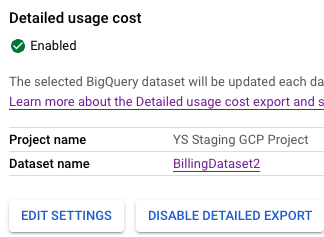
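If you script this step instead, a sketch like the one below prints the two gcloud commands that create the service account and bind the custom role to it at the project level. The account ID `yotascale-sa` and the project ID `my-billing-project` are hypothetical; project-level custom roles are referenced as `projects/PROJECT_ID/roles/ROLE_ID`.

```python
import shlex


def service_account_commands(project: str, account_id: str = "yotascale-sa",
                             role_id: str = "yotascale") -> list[list[str]]:
    """Return gcloud commands that create the service account and grant it the custom role."""
    email = f"{account_id}@{project}.iam.gserviceaccount.com"
    return [
        ["gcloud", "iam", "service-accounts", "create", account_id,
         f"--project={project}",
         "--display-name=Yotascale Service Account"],
        ["gcloud", "projects", "add-iam-policy-binding", project,
         f"--member=serviceAccount:{email}",
         # Custom roles defined in a project use this full resource name.
         f"--role=projects/{project}/roles/{role_id}"],
    ]


if __name__ == "__main__":
    for cmd in service_account_commands("my-billing-project"):
        print(shlex.join(cmd))
```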

Step 4: Enable billing export to a BigQuery dataset

From the GCP Billing Account dashboard, click Billing export.

Important: Make sure that you enable “Detailed usage cost”. The detailed version is required for comprehensive cost allocation and recommendations.

Click EDIT SETTINGS.

Choose the project that will hold the exported billing data.

Give your dataset a name, for example, BillingDataset2.

The dataset must be in the US or EU multi-region for a full export.

Step 5: Wait for the dataset table to be created (new datasets only)

Creating the dataset table can take up to 24 hours. The detailed usage cost export automatically creates the table and adds new usage data daily, as described in this article:

https://cloud.google.com/billing/docs/how-to/export-data-bigquery-tables/detailed-usage

The table with the detailed usage data is:

gcp_billing_export_resource_v1_<BILLING_ACCOUNT_ID>

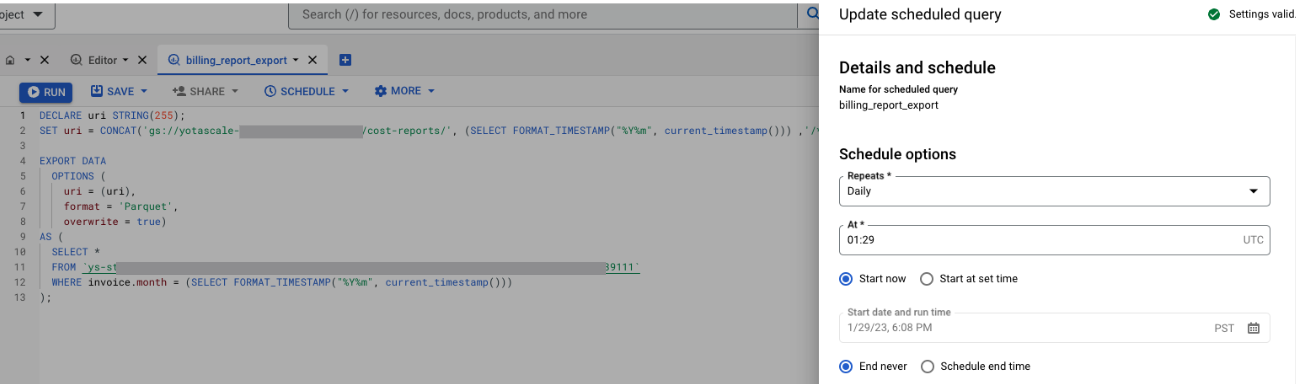
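You need this table name in Steps 6 and 7. The helper below is a small sketch that builds the fully qualified name. It assumes, based on the example table name used in Step 6, that the hyphens in the billing account ID (as shown in the Billing console) become underscores in the table name; check the result against the table that actually appears in your dataset.

```python
def billing_export_table(project_id: str, dataset: str, billing_account_id: str) -> str:
    """Build <PROJECT_ID>.<DATASET>.gcp_billing_export_resource_v1_<BILLING_ACCOUNT_ID>.

    Assumption: hyphens in the billing account ID appear as underscores
    in the table name.
    """
    suffix = billing_account_id.strip().replace("-", "_")
    return f"{project_id}.{dataset}.gcp_billing_export_resource_v1_{suffix}"


if __name__ == "__main__":
    # Values from the example in this guide (the account ID is partly masked).
    print(billing_export_table("ys-staging-gcp-project", "BillingDataset2", "018011-9F9A8D-XXXXX"))
```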

Step 6: Create a scheduled BigQuery job to export billing costs for the current month

Click the dataset name. In this example, BillingDataset2.

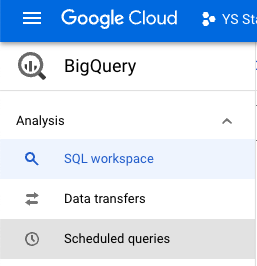

From the BigQuery menu, select Scheduled queries.

Click + CREATE SCHEDULED QUERY IN EDITOR.

Add the following query. Replace `$$BUCKET-NAME$$` with the name of the bucket you created in Step 1, and replace `$$PROJECT.DATASETNAME.TABLE$$` with the fully qualified name of your detailed usage export table.

For example: `ys-staging-gcp-project.BillingDataset2.gcp_billing_export_resource_v1_018011_9F9A8D_XXXXX`

This follows the pattern `<YOUR_PROJECT_ID>.<YOUR_DATASET_NAME>.gcp_billing_export_resource_v1_<BILLING_ACCOUNT_ID>`.

```sql
-- Export the current invoice month (UTC) to gs://<bucket>/cost-reports/YYYYMM/*.parquet.
DECLARE uri STRING(255);
SET uri = CONCAT('gs://$$BUCKET-NAME$$/cost-reports/',
                 (SELECT FORMAT_TIMESTAMP("%Y%m", current_timestamp())),
                 '/*.parquet');
EXPORT DATA OPTIONS (
  uri = (uri),
  format = 'Parquet',
  overwrite = true)  -- overwrite existing files at this destination
AS (
  SELECT *
  FROM `$$PROJECT.DATASETNAME.TABLE$$`
  WHERE invoice.month = (SELECT FORMAT_TIMESTAMP("%Y%m", current_timestamp()))
  LIMIT 9223372036854775807  -- required; see the note below
);
```

Note: The `LIMIT 9223372036854775807` clause is required. Without it, GCP can combine several days of cost data into a single file when the data volume is small, which could lead to double counting.

Click Schedule, then update the scheduled query and select Daily.

Set the query to run in the early morning, such as at 01:20 UTC.

To continue running the query, select End never.
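To see what the scheduled query does, here is a Python sketch (an illustration only; BigQuery runs the SQL itself) that fills in the two placeholders and computes the invoice month and destination URI the same way the query does. `current_timestamp()` is evaluated in UTC, so the sketch converts a timezone-aware datetime to UTC first. The bucket and table names are hypothetical placeholders.

```python
from datetime import datetime, timezone


def fill_placeholders(sql: str, bucket: str, table: str) -> str:
    """Substitute the two placeholders used in the export queries."""
    return sql.replace("$$BUCKET-NAME$$", bucket).replace("$$PROJECT.DATASETNAME.TABLE$$", table)


def current_month_export(bucket: str, now: datetime) -> tuple[str, str]:
    """Mirror the daily query: return (invoice month, export URI) for a timezone-aware run time."""
    month = now.astimezone(timezone.utc).strftime("%Y%m")  # like FORMAT_TIMESTAMP("%Y%m", ...)
    return month, f"gs://{bucket}/cost-reports/{month}/*.parquet"


if __name__ == "__main__":
    snippet = "FROM `$$PROJECT.DATASETNAME.TABLE$$` -> gs://$$BUCKET-NAME$$/cost-reports/"
    print(fill_placeholders(snippet, "yotascale-exports", "my-project.BillingDataset2.my_table"))
    # A run at 01:20 UTC on 1 May 2024 targets the May 2024 invoice month.
    print(current_month_export("yotascale-exports", datetime(2024, 5, 1, 1, 20, tzinfo=timezone.utc)))
```

Because each daily run exports only the month of its run date, the run on the first day of a month switches to the new month, which is why a second query for the previous month is needed.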

Step 7: Create a second scheduled BigQuery job to export billing costs for the previous month

GCP can make small updates to cost data up to three days after the end of a month, to reconcile numbers or to correct for usage that is processed late.

The Step 6 query exports only the month of its run date, so it never captures the complete data for the last day of a month: when it runs early on the last day, that day is not over yet, and when it runs on the 1st of the next month, it exports the new month instead.

Add a second query that re-exports the entire previous month on the first three days of each month. This picks up the last day of the month and any values that arrive up to three days late.

From the BigQuery menu, select Scheduled queries.

Click + CREATE SCHEDULED QUERY IN EDITOR.

Enter the following query and make these replacements:

- Replace `$$BUCKET-NAME$$` with the name of the bucket you created in Step 1.

- Replace `$$PROJECT.DATASETNAME.TABLE$$` with the same table name you used in Step 6, for example: `ys-staging-gcp-project.BillingDataset2.gcp_billing_export_resource_v1_018011_9F9A8D_XXXXX`. This follows the pattern `<YOUR_PROJECT_ID>.<YOUR_DATASET_NAME>.gcp_billing_export_resource_v1_<BILLING_ACCOUNT_ID>`.

```sql
-- Re-export the previous invoice month to gs://<bucket>/cost-reports/YYYYMM/*.parquet.
DECLARE target_timestamp TIMESTAMP;
DECLARE target_billing_month STRING(50);
DECLARE uri STRING(255);
-- One month before today's date (UTC), formatted as YYYYMM.
SET target_timestamp = CAST(DATE_SUB(CAST(current_timestamp() AS DATE), INTERVAL 1 MONTH) AS TIMESTAMP);
SET target_billing_month = FORMAT_TIMESTAMP("%Y%m", target_timestamp);
SET uri = CONCAT('gs://$$BUCKET-NAME$$/cost-reports/', target_billing_month, '/*.parquet');
EXPORT DATA OPTIONS (
  uri = (uri),
  format = 'Parquet',
  overwrite = true)  -- overwrite existing files at this destination
AS (
  SELECT *
  FROM `$$PROJECT.DATASETNAME.TABLE$$`
  WHERE invoice.month = target_billing_month
  LIMIT 9223372036854775807  -- required, as in Step 6
);
```

Click Schedule, then update the scheduled query.

Select Monthly, on days 1, 2, and 3.

Select Never Ends.

Enter an early morning start time, such as 03:00 UTC.

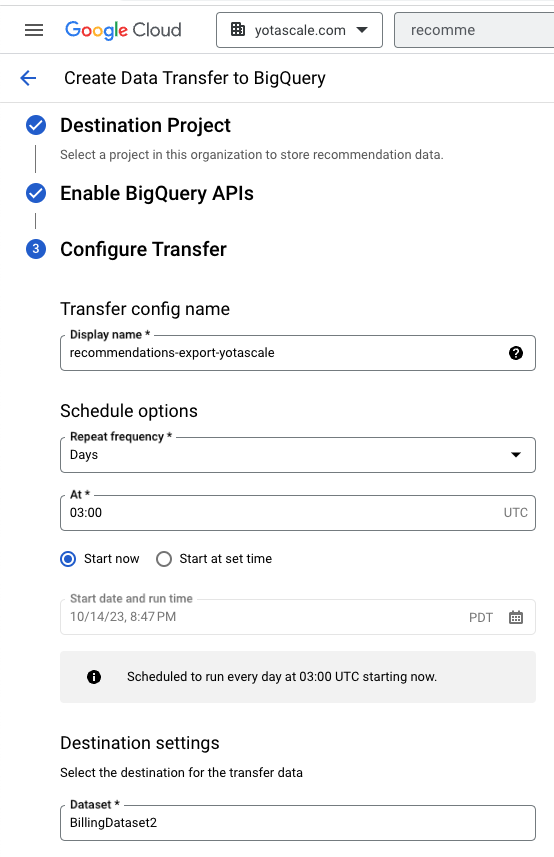
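The second query targets the month before its run date. The sketch below reproduces that calculation in Python so you can check the schedule: runs on the 1st, 2nd, and 3rd of a month all target the previous month, so each run re-exports that month with the latest data. Stepping back one day from the first of the month is a different technique from the query's `DATE_SUB(..., INTERVAL 1 MONTH)`, but both land in the previous calendar month.

```python
from datetime import date, timedelta


def previous_billing_month(run_date: date) -> str:
    """Return the YYYYMM invoice month before run_date's month.

    Like the query's DATE_SUB(..., INTERVAL 1 MONTH), this always lands in
    the previous calendar month; here we step back one day from the 1st.
    """
    first_of_month = run_date.replace(day=1)
    return (first_of_month - timedelta(days=1)).strftime("%Y%m")


if __name__ == "__main__":
    # Runs on the 1st, 2nd, and 3rd of March 2024 all target February 2024.
    for day in (1, 2, 3):
        run = date(2024, 3, day)
        print(run, "->", previous_billing_month(run))
```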

Step 8: Enable recommendations export

Create an export of your GCP recommendations to BigQuery to share with Yotascale. This allows Yotascale to map cost-saving recommendations to costs by cost category (such as compute, database, and storage) and by resource ID, so that identified savings opportunities can be matched to their business context.

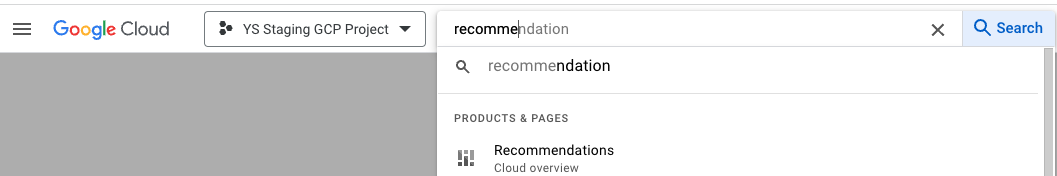

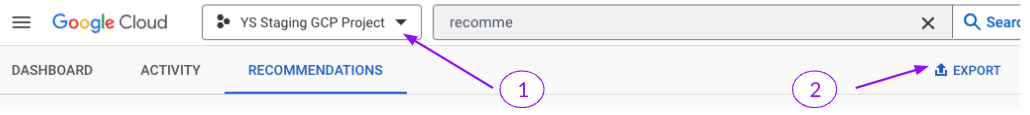

In the GCP console, search for Recommendations.

Select the GCP project that holds the BigQuery dataset you are sharing with Yotascale (1), then click Export (2).

Enter a name, set a schedule, and select the dataset that you defined earlier and shared with Yotascale.

Click Save. The scheduled recommendations export is now set up.

Step 9: Configure your GKE containers to export usage metrics to Yotascale

Yotascale uses Prometheus to ingest GKE metrics and provide cost allocation by:

- Cluster Name

- Namespace

- Container Labels

- Workload Type

To enable the required GKE metrics, download and run the Yotascale agent in each of your clusters:

Log in to Yotascale and download the agent configuration template from Settings > Cloud Connections > New Connection > GKE.

Here are additional instructions (in case you are not familiar with installing a Prometheus agent)

Repeat these steps for each cluster.

Steps required on the Yotascale dashboard

Now that GCP is exporting detailed cost and usage data to your bucket, you can give Yotascale access to that bucket.

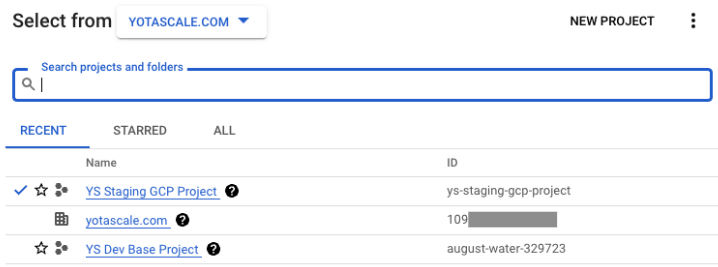

Step 1: Create a new cloud connection on the Yotascale dashboard

Log in to the Yotascale dashboard: https://next.yotascale.io

Go to Settings > Cloud Connections.

Click + NEW CONNECTION.

Select GCP Billing Account.

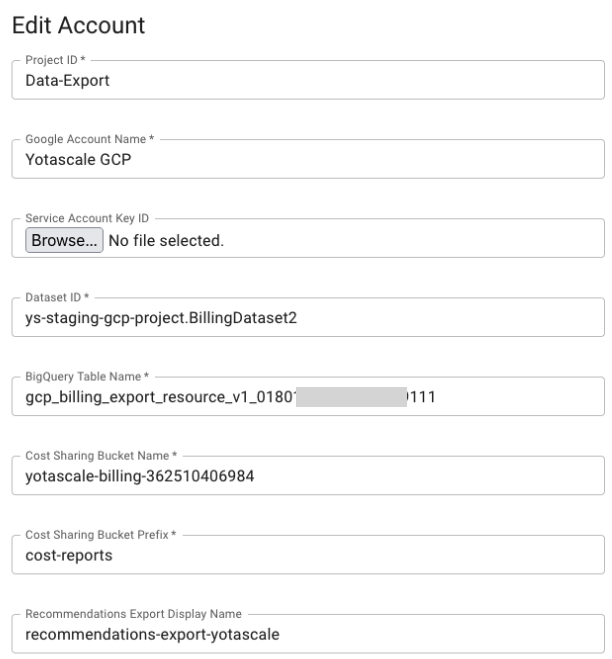

In the Project ID field, enter the ID of your GCP project, as shown in the GCP console.

Enter a Google Account Name that helps you recognize the connection, such as Production Account.

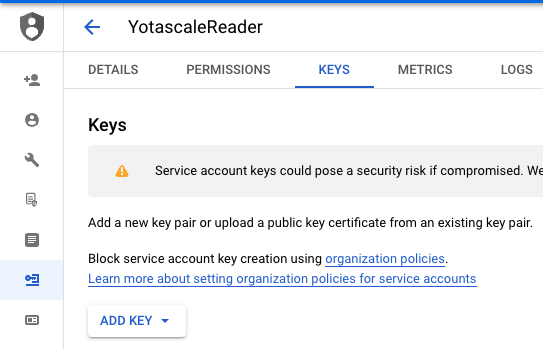

For Service Account ID, download a service account key from the GCP console.

Go to the service account that you created in the GCP console.

Click Keys.

Click ADD KEY > Create new key, select JSON, and click CREATE.

Your browser downloads the JSON key file automatically.
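Before uploading, you can sanity-check the key file. The sketch below is a hypothetical helper, not a Yotascale tool: it parses the JSON and checks the `type`, `project_id`, and `client_email` fields that Google service account key files contain. The file name and project ID are passed on the command line, and the script does nothing if they are omitted.

```python
import json
import sys


def check_service_account_key(key_text: str, expected_project: str) -> str:
    """Return the service account email from a key file's JSON text.

    Raises ValueError if the JSON does not look like a service account key
    for expected_project.
    """
    key = json.loads(key_text)
    if key.get("type") != "service_account":
        raise ValueError("not a service account key file")
    if key.get("project_id") != expected_project:
        raise ValueError(f"key belongs to project {key.get('project_id')!r}")
    email = key.get("client_email", "")
    if not email.endswith(".iam.gserviceaccount.com"):
        raise ValueError(f"unexpected client_email: {email!r}")
    return email


# Usage: python check_key.py KEY_FILE.json PROJECT_ID (hypothetical script name)
if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1], encoding="utf-8") as f:
        print(check_service_account_key(f.read(), sys.argv[2]))
```

If the checks pass, the script prints the service account email, which should match the Yotascale service account you created in Step 3.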

To upload this file, select Choose File.

Enter the remaining parameters that you created in the GCP console.

Click Save.

Step 2: Inform Yotascale that you onboarded your GCP account and GKE clusters

Let Yotascale know that you completed the onboarding steps so that cost processing can begin and your data becomes visible in Yotascale.

GCP takes about a day to run the cost and usage billing export into BigQuery. On the day that you onboard, Yotascale may not yet be able to process your cost data because it is not yet exported.